Post by Callum Berger (2021 cohort)

1. Placement at the Virtual and Immersive Production Studio

I started my placement in July 2023 with my industry partner, AlbinoMosquito Productions Ltd, which is run by Richard and Rachel Ramchurn and based at the Virtual and Immersive Production (VIP) Studio at King’s Meadow Campus. The VIP Studio houses a range of high-end technologies that artists and developers can use to create projects and artwork, including motion capture, volumetric capture, Pepper’s ghost holograms, Teslasuits, and virtual reality (VR) headsets. As one of the only studios in the Midlands to offer this range, it gave me a great opportunity to work alongside other creators and gain experience in design and creation across a variety of technologies.

Throughout the first month of the placement, I worked alongside Richard on a series of development projects, including working with artists to capture their performances. These ranged from tightrope artists to dancers with disabilities. It was incredible to see how these technologies allowed such a diverse range of artists to express themselves.

2. Filming a Virtual Reality Experience

In the second month of the placement, I began working with Richard to develop a virtual reality experience using volumetric capture. The project, funded by Arts Council England, aimed to produce an experience that takes the audience through a futuristic Nottingham engulfed in a climate crisis, prompting discussion about the climate situation we live in today. Planning had begun the previous year, when Richard, Rachel, and I explored potential applications of a VR experience that adapts using brain data as a real-time input. Having settled on the climate crisis theme and its premise, we used the placement as an opportunity to bring it to life.

Working with AlbinoMosquito on a VR climate experience presented a series of challenges and opportunities, including the move from 2D to 3D space, digital environments, the presentation of actors, and user interaction. Going beyond 2D film into 3D space requires an awareness of the visual surroundings: users have a 360-degree view of the environment, so capturing actors and environments from a single camera viewpoint is not enough.

We decided to split the experience into a series of scenes that allow the viewer to embody an individual and witness events unfold over time from their perspective. The experience begins with a group of refugees moving to a camp in Nottingham, who then discuss their concerns for their safety before tragedy befalls the group. I worked loosely with the writers, helping steer the direction towards more fearful elements, in the hope of using this experience as part of my PhD.

Once the scripts were complete, we began casting, focusing on local actors to support the growth of the industry in Nottingham and the surrounding areas. Potential actors sent in short demo tapes of themselves playing the character they had been asked to audition for. From there, we made final decisions on who would be cast and were ready to move on to filming.

2.1 Filming with Volumetric Capture

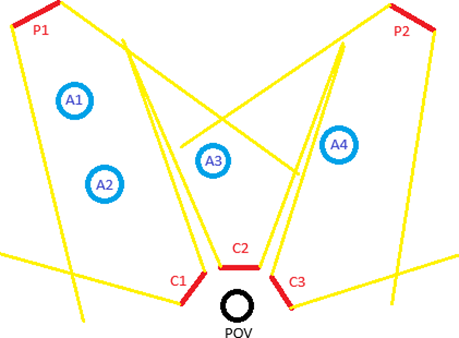

Testing showed that using fewer cameras for each render reduced both the processing workload and the storage space required for the captures. Richard and I therefore aimed to use the fewest cameras possible for each scene. We found this minimum by trial and error, testing each scene setup to determine the smallest number of cameras needed to capture every actor in the scene. This approach involved placing cameras that captured actors from the viewpoint of the user, as shown in Figure 1. As the experience places the viewer in a point-of-view (POV) position, actors needed to know where this virtual camera would be throughout filming. We therefore placed an ambisonic microphone at the POV position, allowing the actors to move around the space with an awareness of the viewer’s location.
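The trial-and-error search described above can be framed as finding the smallest subset of cameras whose views together include every actor. The sketch below automates that search under stated assumptions: the coverage map (which actors each camera can see in a given scene layout) is hypothetical input that would have to be built from test captures, the camera and actor names are illustrative, and it ignores the overlap between cameras that calibration requires. It illustrates the idea rather than the process we followed, which was done by hand.

```python
from __future__ import annotations

from itertools import combinations


def minimum_camera_set(
    coverage: dict[str, set[str]], actors: set[str]
) -> tuple[str, ...] | None:
    """Return the smallest set of cameras that together see every actor.

    `coverage` is a hypothetical map from camera ID to the actors that camera
    can see in one scene layout. Subsets are tried in order of increasing
    size, so the first subset that covers all actors is a minimum one.
    Calibration overlap between cameras is NOT modelled here.
    """
    cameras = sorted(coverage)
    for k in range(1, len(cameras) + 1):
        for subset in combinations(cameras, k):
            seen = set().union(*(coverage[c] for c in subset))
            if actors <= seen:
                return subset
    return None  # no combination of cameras sees every actor
```

Checking every subset grows exponentially with the number of cameras, but for a rig of around a dozen cameras that is only 2^12 = 4096 subsets, so brute force is cheap; a larger rig would call for a greedy set-cover approximation instead.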

However, this created a calibration problem: to set up the 3D capture space, the cameras must be calibrated against each other, which requires overlapping fields of view between them. To overcome this, we added pairing cameras to provide the overlap needed for calibration. This made calibration possible, but at the cost of more cameras in each scene. Faced once again with too many cameras, I developed a tool in Unity that removes any chosen cameras from a recording. It took the metadata of a volumetric capture as input, determined which cameras had been used, and displayed them to the user. We could then remove any pairing cameras not needed for the final render, and the tool would output a new metadata file storing the altered recording as a separate capture, ready to be rendered with the reduced camera setup.
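The camera-removal tool itself was written in Unity, and the real capture metadata format is not described here. The Python sketch below only illustrates the same idea against an assumed JSON layout: the `name`, `cameras`, `frames`, and `camera_files` fields, and the functions `cameras_used`, `remove_cameras`, and `strip_capture_file`, are all hypothetical. It lists the cameras referenced by a capture, drops the chosen ones, and saves the result as a new capture so the original recording is left untouched.

```python
from __future__ import annotations

import copy
import json

# Assumed (hypothetical) metadata layout, not a real capture format:
# {
#   "name": "scene_2_take_3",
#   "cameras": [{"id": "cam_01"}, ...],
#   "frames": [{"index": 0, "camera_files": {"cam_01": "f0_c1.ply", ...}}, ...]
# }


def cameras_used(metadata: dict) -> list[str]:
    """Return the sorted IDs of every camera referenced by any frame."""
    used: set[str] = set()
    for frame in metadata["frames"]:
        used.update(frame["camera_files"])  # keys are camera IDs
    return sorted(used)


def remove_cameras(metadata: dict, to_remove: set[str], new_name: str) -> dict:
    """Return a copy of the capture metadata without the chosen cameras."""
    unknown = to_remove - set(cameras_used(metadata))
    if unknown:
        raise ValueError(f"Unknown camera IDs: {sorted(unknown)}")

    altered = copy.deepcopy(metadata)  # never modify the original capture
    altered["name"] = new_name
    altered["cameras"] = [c for c in altered["cameras"] if c["id"] not in to_remove]
    for frame in altered["frames"]:
        frame["camera_files"] = {
            cam_id: path
            for cam_id, path in frame["camera_files"].items()
            if cam_id not in to_remove
        }
    return altered


def strip_capture_file(src: str, dst: str, to_remove: set[str], new_name: str) -> dict:
    """Read a metadata file, remove cameras, and save the result as a new capture."""
    with open(src, encoding="utf-8") as f:
        metadata = json.load(f)
    altered = remove_cameras(metadata, to_remove, new_name)
    with open(dst, "w", encoding="utf-8") as f:
        json.dump(altered, f, indent=2)
    return altered
```

For example, `strip_capture_file("take_3.json", "take_3_trimmed.json", {"cam_02"}, "take_3_trimmed")` would write a copy of the hypothetical capture without `cam_02`. Writing a new file rather than editing in place mirrors the behaviour described above, where the altered recording was kept as a separate capture.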

Filming took place over a week. With a limited budget, we minimised actors’ travel and accommodation where possible by capturing every scene an actor appeared in on the days they were with us. This meant planning which scenes were filmed each day and how scenes could be split up around separate dialogues. Once filming was finished, we moved on to bringing the volumetric captures into a virtual world and building the environments around the actors.

3. Post-production of the Virtual Reality Experience

The final month of my placement involved bringing all the captures into Unity and working alongside a 3D graphics artist, Sumit Sarkar, and a sound artist, Gary Naylor, to bring the experience to life. Although we are still adding to and refining the experience, we have a complete draft and have begun screening it for audiences. The screenshots below show examples of the experience using the captures. We are now preparing the experience to be screened at film festivals in the summer.

The placement allowed me to work in situations and on projects I had never had the chance to work on before, and it has been invaluable to my PhD experience. I want to give a huge thank you to Richard and Rachel for their work throughout the placement, as well as to everyone else involved in the projects, for helping me get the most out of my placement at the VIP Studio.