post by Favour Borokini (2022 cohort)

From June 25th to July 15th, 2023, I attended the 6th annual Diverse Intelligences Summer Institute (DISI) at the University of St Andrews. The institute aims to foster interdisciplinary collaboration on how intelligence is expressed in humans, non-human animals, artificial intelligence (AI) and beyond.

I was excited to attend the Summer Institute because of my interest in AI ethics from an African and feminist perspective. My current PhD research focuses on the potential affordances avatars offer African women and the challenges they pose. As AI is now often involved in creating digital images, I thought DISI would be a great environment to share ideas and gain insight into how to conceptualise these challenges and opportunities.

The attendees were divided into two groups: Fellows and Storytellers. Fellows were mostly early career researchers from diverse fields, such as cognitive science, computer science, ethnography and philosophy. The Storytellers were artists who created or told stories, and their number included an opera singer, a dancer, a weaver, a sci-fi author, a sound engineer and many others. With their creativity and their ability to spur unselfconscious expression in all the participants, the Storytellers brought spontaneity and life to what might otherwise have been a dreary three weeks.

DISI 2023 began on a rainy evening, the first of many rainy days, with an icebreaker designed to help Fellows and Storytellers get to know each other. Over the following days, we heard a series of engaging lectures on topics as varied as brain evolution in foxes and dogs, extraterrestrial intelligence, psychosis and shared reality, and the role of the arts in visualising conservation science. A typical summer school day had two ninety-minute lectures, punctuated by two short recesses and a longer lunch break.

The lecture on Psychosis and Shared Reality was given by Paul Fletcher, a Professor of Neuroscience at the University of Cambridge who had advised the development team of Hellblade, a multi-award-winning video game that vividly portrays mental illness. The game reminded me of several similar research projects under way at the CDT on gaming and the mind. As a Nigerian, I reflected on how psychosis and mental illness are framed in my culture, and on the non-Western ways these ailments are treated and addressed.

That first week, I was quite startled to find that two people I had chatted with casually, at dinner and on my way to St Andrews, were faculty members. One of them was Dr. Zoe Sadokierski, an Associate Professor in Visual Communication at the University of Technology Sydney, Australia, who gave a riveting lecture on visualising the cultural dimensions of conservation science using participatory methods.

In that first week, we were told that we would each work on at least one project and at most two (more, unofficially), and that there would be a pitching session spread over two afternoons. I pitched two projects. The first explored the aspirations, fears and hopes of my fellow participants using the Story Completion method, a qualitative research method with roots in psychology, in which a researcher elicits fictional narratives from participants using a brief prompt called a stem. Because participants tell the story from a stranger's point of view, the method helps them discuss sensitive or controversial subjects.

Many of the stories were entertaining and wildly imaginative, but I was particularly struck by a recurring anxiety: that by 2073, the beautiful city of St Andrews would be submerged by rising sea levels. To me, this reflected how attached we had all become to that historic city, and how attachment to places and things can help us care more.

For my second project, two friends (pictured below) and I interviewed six of our fellow DISI attendees for a podcast titled A Primatologist, a Cognitive Scientist and a Philosopher Walk into a(n Intergalactic) Bar. The idea was to have artists and researchers tell an ignorant but curious alien on a flying turtle planet called Edna about their work and about Earth. The interviews sparked unexpectedly reflective conversations about the nature of life on Earth, our relationship with nature, and human values such as honesty. On the final day, we presented an audio trailer featuring some of the most insightful moments from these conversations.

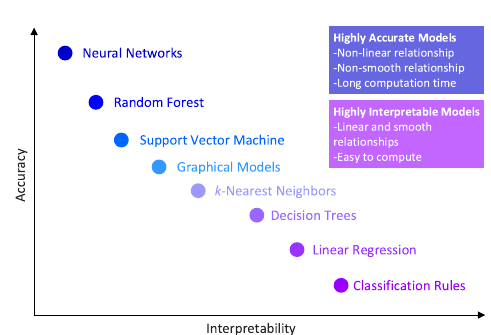

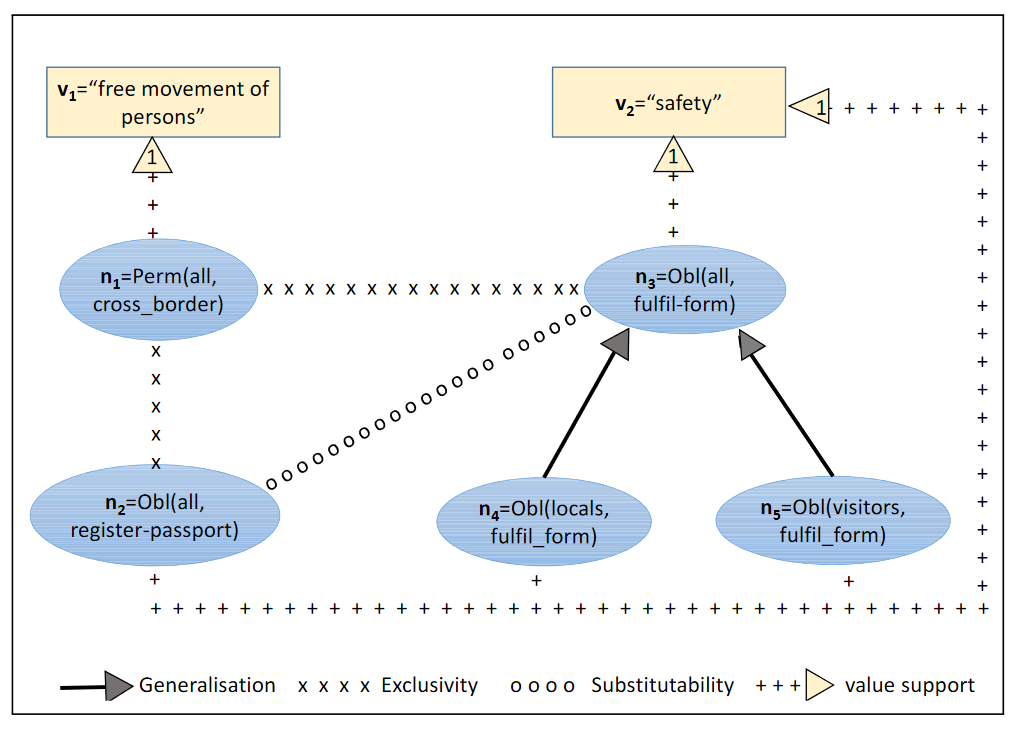

Being prone to criticism, I often felt disconcerted by what I perceived as a lack of emphasis on ethics. Having worked in technology ethics and policy, I felt prompted to question the impact and sources of much of what I heard. In a session on the invisibility of technology, I was deeply disturbed by the idea that good technology should be invisible. To my mind, invisibility (the kind of melding into perception that postphenomenology calls embodiment) speaks more to efficiency than to “good”, especially given use cases such as surveillance.

There were some heated conversations, too, such as the one on eugenics and ethics in scientific research. The question was how the public could be expected to trust scientists if scientists felt ethically compelled not to carry out certain types of research, or to withhold sensitive findings obtained during their research.

The session questioning the decline in “high-risk, high-return research”, which, unsurprisingly, focused on research within the sciences, led to comments about funding cuts for the social sciences, arts and humanities, driven by the characterisation of these fields as low-risk and low-return. Ironically, I reflected, the precarity of these fields qualifies them as high-risk at least, if not high-return.

But the summer school wasn’t all lectures; there were numerous other activities, including trips to the zoo and botanical garden, aquarium visits, beach walks, forest bathing and salons. During one such salon, the Storytellers among us gave rousing performances in dance, music, literature and other art forms.

I also joined a late-evening expedition to listen to bats, organised by Antoine, one of my podcast co-hosts. There was something sacred about walking in the bats' shoes that evening, as we blindfolded ourselves and relied on our partners to lead us through the dark by touch alone, stumbling as a small river rushed past.

I think actually speaking with my fellow attendees warmed me towards them and their research. I believe ethics is always subjective, and our predispositions and social contexts shape what we view as ethical. At DISI, I found that ethics can be a journey, as I discovered unethical twists in my own perspective.

This thawing made me enjoy DISI more, even as it confirmed that I enjoy solitary, rarefied retreats. As the final day drew near, I felt quite connected to several people and had made a few friends whom, like the rarefied air, I knew I would miss.

The success of DISI is due in no small part to the efforts of the admin team: Erica Cartmill, Jacob Foster, Kensy Cooperrider and Amanda McAlpin-Costa. They constantly solicited our feedback and were quite open about the changes they had made since last year.

I had a secret motive for attending. My research no longer centres on AI, and I felt very out of place without something I considered core to the theme. But a conversation with Sofiia Rappe, a postdoctoral Fellow in Philosophy and Linguistics, led me to realise that the ability and desire to shapeshift is itself a manifestation of intelligence, one modelled by many non-human animals, reflecting awareness and cognition of how one fits in and how one ought to navigate one's physical and social environment.

I look forward to returning someday.

You can listen to our podcast here: SpaceBar_Podcast – Trailer