Post by Jimiama Mafeni Mase (2018 cohort)

I participated in the Not-Equal Summer School, a virtual summer school about social justice and the digital economy. The summer school ran from the 7th to the 11th of June. It was designed to equip participants with tools to understand and support social justice in the digital economy. Participants were grouped into teams according to their research or career interests (i.e. urban environment, health & care, eco workers & labour, public services, and education & technology). Each team explored existing and emerging technologies and examined how power and social justice evolve with these technologies.

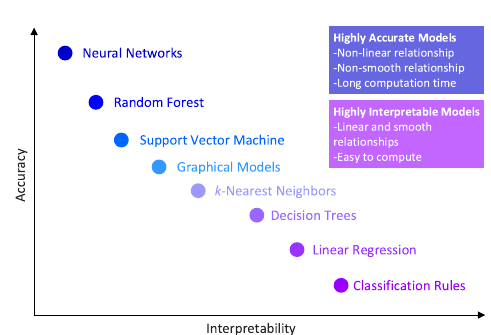

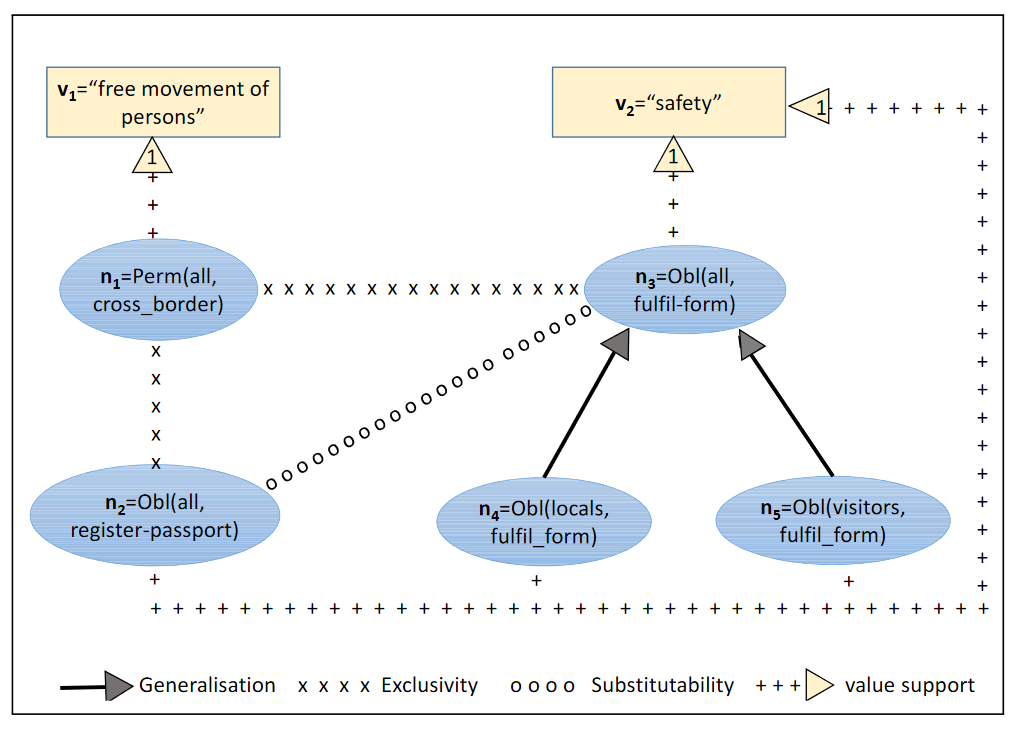

The first day was an introduction, with a talk about the evolution of social justice in digital economies and machine learning. The keynote speakers presented major topics in the use of Artificial Intelligence (AI) systems for social justice, such as the relational structure of AI, the AI modelling pipeline, and the influence of AI and Big Data on human rights. A common theme across the talks was the interpretability, reliability and bias of machine learning model predictions, along with the complexity of the data used to train the models, since data are usually collected at different stages of the pipeline.

The second day consisted of workshops that explored the use of gestures to express the consequences of power and social injustice in our workplaces, research and lives. The identified gestures were then used to propose designs for new utopian technologies. My team proposed a recruitment technology built around hope, transparency and fairness, since candidates often face racial, gender and age bias and discrimination. Our gesture was 'fingers crossed', which signified our hope for a recruitment technology with a fair and transparent process. The day ended with an interesting webinar about the human and collaborative work practices of data science and how they can improve social justice in AI.

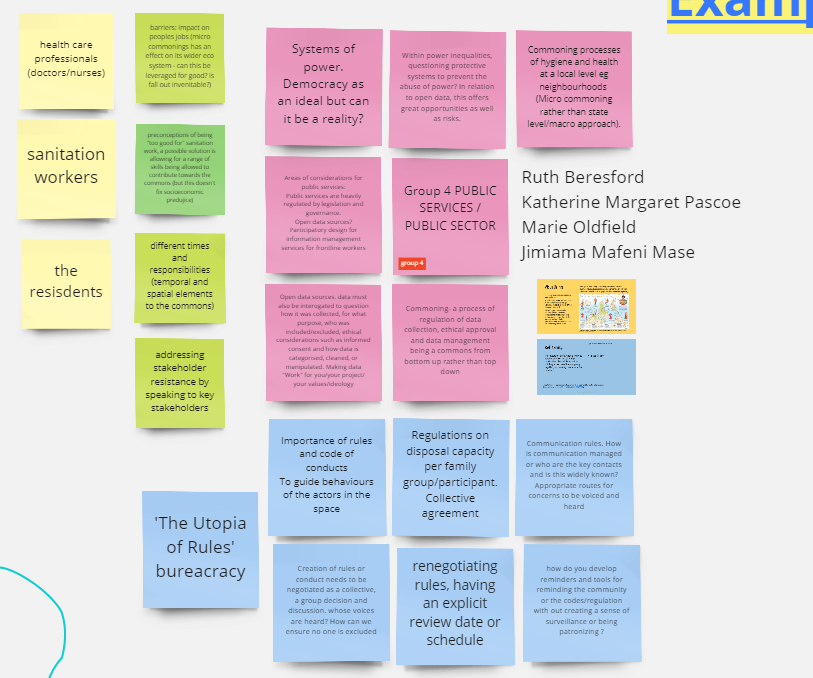

Digital commons were the topic of Day 3. Commoning is the collective and collaborative governance of material resources and shared knowledge. During the day, we were asked to define a common in our areas of interest. My team examined the commoning of hygiene and health at the community level. We identified the key actors in this space as health care professionals, sanitation workers and residents. We also identified key barriers to implementing such a common, including the demands of different jobs and responsibilities, differing work schedules, and partnerships with external stakeholders, e.g. the city council or the NHS. We concluded the workshops by presenting ways of ensuring the success of our proposed digital commons, such as creating rules and procedures to guide the behaviour of the actors, and we emphasised that these rules need to be developed collectively. The day ended with a webinar about making data work for social justice.

The themes of the fourth day were systems change & power dynamics, and working culture. We explored the challenges and opportunities in working cultures and power dynamics for supporting social justice. The key challenges identified were working with senior stakeholders, managing external partners, limited funds and budgets, project deadlines, and resource availability. We then discussed ways of improving working cultures and power dynamics, such as bringing stakeholders together, having the confidence to speak up, adopting perspectives that do not necessarily come from research, creating allies, and rapid prototyping. We also proposed that institutions introduce training courses on power dynamics and working cultures. The day ended with a webinar about using imagination and storytelling for social transformation and social movements. The speakers emphasised the importance of visualising the kinds of futures we want or imagine.

The summer school finished with two intensive workshops about 'design fiction', that is, researching and prototyping design fiction methods for the digital world to envision socially just futures. My team focused on a design fiction for the community, in which members of the community would have equal access to care, knowledge and support through the use of community cobots. The cobots would act on encrypted information containing no personal data to assist members of the community. We imagined that such a cobot would have no access to any personal or individual information, and that all members would have equal rights and receive equal responses from it. These utopian brainstorming and imagination workshops were a great way to close the summer school. During the last hours of the day, we shared our thoughts about the summer school, and each participant was asked to summarise their experience in three words. My words were 'collaboration', 'fairness' and 'power'.

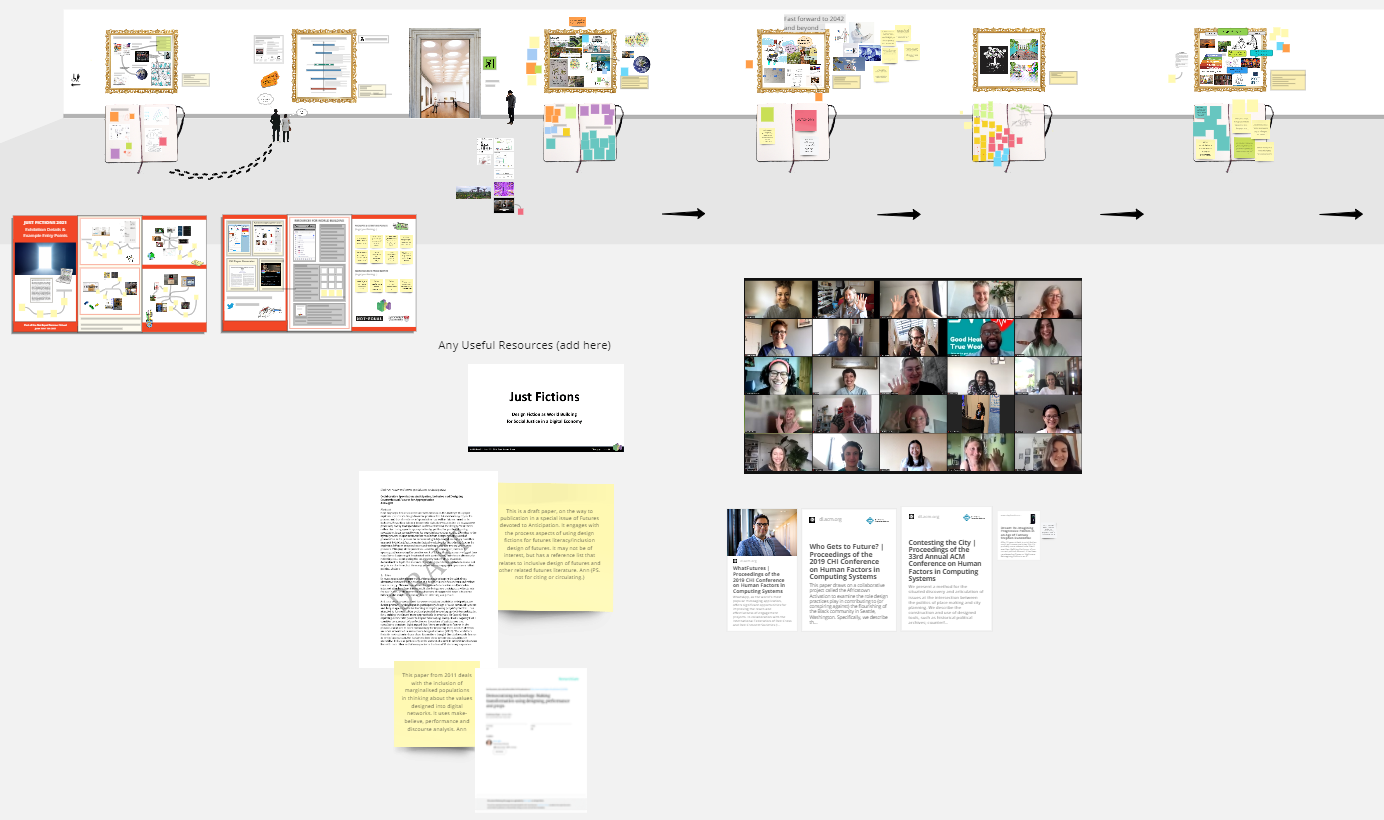

Throughout the summer school, we used Miro, a visual collaborative whiteboard platform for remote team collaboration. It was my first encounter with the platform, but familiarising myself with it was not difficult.