Royal United Services Institute (RUSI): Trusting Machines? Cross-sector lessons from Healthcare and Security, 30 June – 2 July 2021

post by Kathryn Baguley (2020 cohort)

Overview of event

The event ran 14 sessions over three days, so the time passed quickly. The variety was incredible, with presenters from multidisciplinary backgrounds and many different styles of presentation. It was great to see so many professionals with contrasting opinions come together to challenge each other and the audience.

Why this event?

My interest in this conference stemmed from the involvement of my industry partner, the Trustworthy Autonomous Systems hub (TAS), and from wanting to hear the contributions of speakers from the University of Nottingham and the Horizon CDT. The conference's focus on the security and healthcare sectors was outside my usual work, so I thought the sessions would give me new things to consider. I was particularly interested in gaining insights to help me choose case studies and in finding ideas for incorporating the ‘trust’ element into my research.

Learnings from sessions

Owing to the number of sessions, I have grouped my learnings by category:

The dramatic and thought-provoking use of the arts

Even as a long-standing amateur musician, I had never considered the possibilities and effects of using the arts as a lens for AI. This is a point I will carry forward, perhaps not so much into my PhD as into training and embedding in my consultancy work.

The work of the TAS hub

It was great to learn more about my industry partner, particularly its interest in health and security. I can now factor this into my thinking on choosing two further case studies for my research. Following the conference, I am making enquiries with the NHS AI Lab Virtual Hub to see whether there are relevant case studies for my research.

Looking at the possible interactions of the human and the machine

Overall, and in a good way, I came away from the event with more questions to ponder, such as: ‘If the human and the machine were able to confuse each other about their identities, how should we manage and consider the possible consequences?’ My takeaway was that trust is a two-way street between the human and the machine.

Aspects of trust

I’d never considered how humans already trust animals and how this works, so the Guide Dogs talk gave me something entirely new to think about: the power the dogs have, and how the person has to trust the dog for the relationship to work. Dr Freedman’s session, in which he likened trust to a bank account, also brought the concept alive. Ensuring that the account does not go into the ‘red’ is vital, since red signifies a violation of trust, and recovery is difficult. Positive experiences reinforce trust, so the account needs to be kept topped up.

The topic of trust also left me with many questions, and I need to think about how they will feature in my research, such as ‘Can you trust a person?’, ‘Do we trust people more than machines?’ and ‘Do we exaggerate our own abilities and those of our fellow humans?’ The example of being unable to tell a genuine picture from a deepfake while believing that we can is undoubtedly something to ponder. As this example shows, assuming that a human is more trustworthy is, in some cases, a fallacy. Prof Denis Noble also suggested that we have judges because we don’t trust ourselves.

I have reflected on the importance of being able to define trust and trustworthiness. Dr Jonathan Ives described trust as ‘to believe as expected’, whereas trustworthiness is ‘to have a good reason to trust based on past events’. His example of theory and principle illustrates the point: the principle of gravity gives us good reason to expect the apple to fall from the tree. However, we cannot view AI in the same way.

The discussion of trust as an emotion was fascinating because, as a lawyer, it made me question how we could even begin to regulate it. I also wondered how this fits with emotional AI and current regulation. I believe there may be a place for this in my research.

The global context of AI

This session considered whether there is an AI arms race, and it was interesting to ponder whether any past technology has ever had the same disruptive capacity.

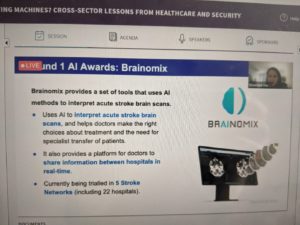

The value of data in healthcare

There were many genuinely great examples of how NHS data can help people in a range of situations, from imaging solutions to cancer treatment. I also found the Data Lens segment very interesting: it provides a search function across health and social care databases to find data for research purposes. The ability to undertake research that supports medical prevention and treatment is excellent. I also found it interesting that the NHS uses the database to reduce professional indemnity claims. I wondered what parameters are in place to ensure that this data is used for good.

The development of frameworks

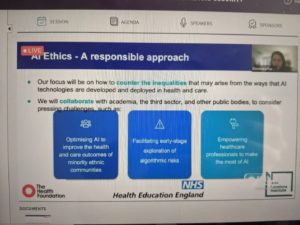

NHSX is working with the Ada Lovelace Institute to create an AI risk assessment similar to a data protection impact assessment (DPIA). The NHS is seeking a joined-up approach across regulators and has mapped the stages of this. I am looking for the mapping exercise and may request it if I’m unable to locate it. I was also encouraged to hear how many organisations benefit from public engagement and expect it from their innovators.

Overall learnings from the event

- Healthcare could provide a case study for my research

- I have more to consider about how to build trust into my research

- Regulation done in the right way can be a driving force for innovation

- Don’t assume that your technology is answering your problem

- It’s ok to have questions without answers

- Debating the problems can lead to interesting, friendly challenges and new ideas

- Massive learning point: understand the problem