Post by Oliver Miles (2018 cohort)

Interning as an Embedded Research Associate with CityMaaS

Finding the right internship – introducing CityMaaS

From July to September 2021, I had the privilege of working as an embedded research associate with CityMaaS, a London-based start-up in the digital inclusion space. The opportunity arose after I pitched my PhD at a Digital Catapult networking event for students and start-ups. I had prioritised attending this event because I was especially keen to experience working in a start-up environment. In the following weeks, I was introduced to Rene Perkins, CEO and co-founder of CityMaaS. We agreed that, at the intersection of her work on digital inclusion and my work on values-driven personalisation, there was scope and mutual interest for a research project uncovering the drivers of, and barriers to, the adoption of digital inclusion. Over a series of conversations, we discussed aims and objectives and ultimately formulated a set of research questions and a target population.

As I am still writing up the research findings, I'll only touch briefly on the research method and content. The focus here is instead on the process of co-creating the 'right' internship, doing research work as an intern, and working as an embedded researcher within an external company. After introducing the key concepts and CityMaaS products and services, I'll discuss the research participants, rationale and outcomes, then reflect on navigating a specifically 'research-orientated' internship and outline plans for future work.

Concepts, products, and services

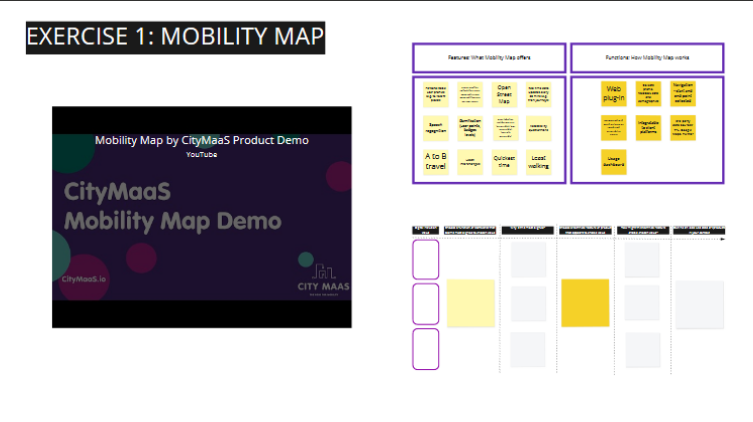

Digital inclusion is a far-reaching domain, but CityMaaS focuses specifically on applications in accessibility and mobility. Its software solutions include 'Assist Me' – a web tool for personalising the audio-visual and interactive content of websites; 'Mobility Map' – a mapping tool that includes machine-learning-driven predictions of location accessibility and personalised route-planning features; and 'AWARE' – an automated compliance checker that scores and reports on a website's alignment with globally recognised web standards[1]. In a nutshell, improving accessibility online and offline is the unifying objective of these solutions.

Who are digital inclusion solutions for?

People with additional accessibility and mobility needs, specifically those affected by visual, auditory, physical and cognitive impairments, are ultimately the key end-users who interact with the products. Crucially, though, they are not the clients. As a business-to-business (B2B) company, CityMaaS markets and sells its solutions to public, private and third-sector organisations looking to improve their own in-house digital inclusion offer, the general incentive being the socio-economic value this adds.

Why do corporate opinions and practices matter?

While the appeal of improving accessibility and mobility could, and arguably should, be considered self-evident, companies investing in bespoke solutions such as Assist Me need to be sure not only of the product's technical functionality but also that it aligns thematically with their own conceptions of digital inclusion. Corporate clients were therefore our population of interest for this work.

Research Activity

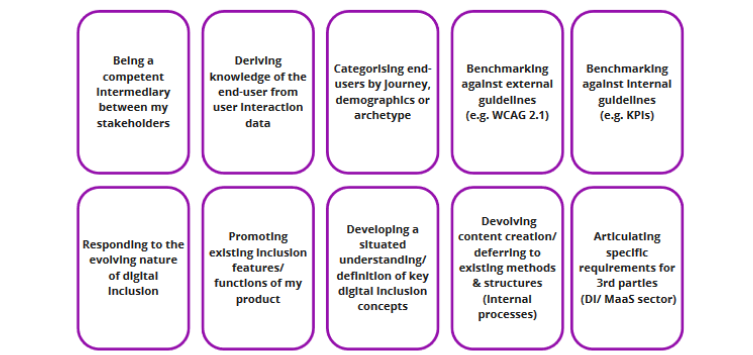

My work combined designing, conducting and analysing interviews with senior heads of digital from third-party organisations known to CityMaaS, with the aim of answering an overarching question: 'What are the drivers of, and barriers to, digital inclusion?' The results, drawn from a thematic analysis of semi-structured interview transcripts and expressed as 10 value themes driving or hindering accessibility and mobility, would go on to inform the design of an ideation workshop (Fig 1).

Interviewees and selected colleagues would then take part in specially designed ideation workshops for accessibility and mobility solutions, mapping the features and functions of CityMaaS products onto the 10 emergent themes (Fig 2).

Research Outcomes

For CityMaaS, the intended outcomes are a clearer definition of the qualitative touchpoints for digital inclusion and the discovery of desirable uses for its software, grounded in real-world values. For me, as a researcher of values-driven personalisation in the digital economy, this was a chance to explore emergent drivers and barriers as a values-orientated resource for ideating digital solutions in diverse corporate settings.

Reflections on navigating ‘researching’ and ‘interning’

Several practical questions required collaborative discussion with the CDT (Centre for Doctoral Training), most notably the nature of the partnership, data collection and storage, and my potentially conflicting status as both a doctoral research student and a CityMaaS intern. We agreed that my status was best framed as that of an 'embedded research associate': while I would be working alongside the CityMaaS team, my research would require university ethical clearance if the results were to be useful to my wider PhD. Data collection and storage were therefore conducted through university systems and protocols. I received no remuneration for my work with CityMaaS, on the prior understanding that the research was exploratory and that I would not be directly involved in business development activity.

In terms of the work itself, the biggest challenges were project management, resourcing (sourcing interviewees and accessing data) and, ultimately, 'scope creep'. For project management, I found mapping activities onto a Gantt chart personally helpful, and I made sure there were deliverables at numerous stages to keep me accountable. For example, running initial requirements-gathering sessions with CityMaaS business development and technical colleagues let me hit an early goal of enumerating product features and functions, which helped me learn the product portfolio before interviewing participants.

Delegating activities, where appropriate, also aided productivity. For example, my colleague in business development had much better access than I did to interested corporate organisations with which CityMaaS was already connected, which meant the substantive part of the internship was not spent mostly on recruiting participants.

The biggest danger, though, remained scope creep. Again, I found that effective project management and short-term deliverables helped: I was able to complete internal requirements gathering, interview design, participant interviews, analysis and workshop design within the allotted three months. Completing the ideation workshops, however, proved an ambitious final component, so they are now scheduled for early 2022. This remains realistic for me because the work is closely related to my PhD; however, had the project needed to end at any earlier stage, the outcomes were designed to be useful as standalone findings.

Conclusion & Future work

On reflection, I found the internship one of the most useful CDT activities in terms of both continued professional development and alignment with my own research interests. I had also never considered working in the digital inclusion sector, nor had I previously had the opportunity to contribute to research in a start-up environment. As I complete the workshops in February 2022 and write up the results, I hope my findings will offer insight to CityMaaS and further my own understanding of values-driven consumption in the digital economy. I would also recommend the Horizon CDT network and partners at Digital Catapult for networking and for finding bespoke internship opportunities.

[1] Based on the W3C (2018) Web Content Accessibility Guidelines (WCAG) 2.1 https://www.w3.org/TR/WCAG21/